| Are you ready for the next big thing in artificial intelligence? OpenAI’s GPT-4 has arrived, and it promises to be the most advanced language model yet. Many are wondering how it stacks up against its groundbreaking predecessor, GPT-3. In this article, we’ll take a deep dive into the world of language models and explore the new features and capabilities of GPT-4. From enhanced natural language processing to improved contextual understanding, we’ll show you what you need to know about GPT-4 and how it compares to GPT-3. Whether you’re a tech enthusiast, a business leader, or simply curious about the latest developments in AI, this article is a must-read. So, let’s get started!

GPT-3

GPT-3 (Generative Pre-trained Transformer 3) is a deep learning-based language model developed by OpenAI. It was introduced in May 2020 as the successor to the GPT-2 model. GPT-3 is considered a significant improvement over its predecessor in terms of size and performance. At its release it was one of the largest language models ever trained, with 175 billion trainable parameters, and it is known for its ability to generate a wide range of outputs, including text, code, stories, and poems.

The development of GPT-3 was a major breakthrough in natural language processing (NLP) and machine learning. It reportedly took over two years to develop and required massive computational resources, including hundreds of GPUs and access to vast amounts of data. The model was trained on a filtered version of Common Crawl consisting of 410 billion byte-pair-encoded tokens, along with other sources such as WebText2, Books1 and Books2, and Wikipedia.

One of the unique features of GPT-3 is its ability to perform in Few-shot (FS), One-shot (1S), and Zero-shot (0S) settings. In the Few-shot setting, the model is given between 10 and 100 examples (“shots”), each an input paired with its corresponding output, before the task itself.
The One-shot setting is the same as the Few-shot setting, except that only one example is given to the model ahead of the final input (which is the task). In the Zero-shot setting, the model receives no examples at all, only the task. This setting is considered “unfairly hard” since it can be challenging even for humans to understand a task with no examples or demonstrations.

GPT-3 has been used in over 300 applications across various industries, including productivity, education, creativity, and games. Its diverse capabilities have helped developers create new applications that were not possible before. The model has also raised concerns about its potential impact on the job market and society at large, as it can be used to automate many tasks that were previously performed by humans.

GPT-3.5

GPT-3.5 is an enhanced version of GPT-3, which is a language processing model based on deep learning. GPT-3.5 is sometimes associated with InstructGPT because its training process also includes a technique called “reinforcement learning from human feedback” (RLHF). The 1.3 billion figure often quoted in this context refers to the smallest InstructGPT model, which has more than 100 times fewer parameters than the 175-billion-parameter GPT-3 yet produced outputs that human raters preferred. RLHF is a training technique that uses human feedback to steer a model toward the responses people actually want.

GPT-3.5 was trained on a blend of text and code published before the end of 2021, and it is not able to access or process more recent data. It learned the relationships between sentences, words, and parts of words by ingesting huge amounts of data from the web, including hundreds of thousands of Wikipedia entries, social media posts, and news articles.

OpenAI, the company behind GPT-3.5, used it to create several systems that are fine-tuned to achieve specific tasks. One of these systems is text-davinci-003, which is better at both long-form and high-quality writing than models built on GPT-3.
It also has fewer limitations and scores higher on human preference ratings than InstructGPT, a family of GPT-3-based models released by OpenAI earlier in 2022.

GPT-3.5 can be used for a variety of tasks, including digital content creation, writing and debugging code, and answering customer service queries. It is accessible via the OpenAI Playground, which provides a user interface for interacting with the model. Users can type in a request and adjust parameters like the model, temperature, and maximum length to see how the output varies.

Additionally, GPT-3.5 can assist in automating content creation and translation, summarizing texts, generating code, and even composing music. It has shown great potential in fields such as customer service, e-commerce, and digital marketing.

GPT-4

Building on the remarkable language generation capabilities of its predecessor, GPT-3.5, OpenAI’s GPT-4 ushers in a new era of AI capabilities. This state-of-the-art model boasts significant enhancements in several key areas, including language understanding, context recognition, emotional intelligence, and domain-specific expertise. One of the most exciting innovations in GPT-4 is its ability to handle multimodal inputs, revolutionizing the way we interact with AI systems.

Multimodal Input: A Breakthrough in AI Communication

GPT-4’s multimodal input functionality is a groundbreaking feature that enables it to process and interpret a combination of text and images. This allows the AI model to analyze and understand prompts that include both textual and visual elements, making it more versatile and adaptable across various industries.

With this capability, GPT-4 can analyze and understand a wide range of image and text types, including documents with embedded photographs, diagrams (both hand-drawn and digital), and screenshots.
This functionality redefines the potential applications of AI and opens up new opportunities in industries such as healthcare, finance, and education.

Improved Language Understanding and Context Recognition

GPT-4 showcases a superior understanding of human language thanks to its advanced algorithms and extensive training dataset. It is adept at recognizing linguistic nuances, slang, and idiomatic expressions, making it more versatile and adaptable in different conversational settings.

Furthermore, GPT-4’s refined context recognition capabilities enable it to maintain coherent and extended interactions, with the AI system remembering previous conversation points and avoiding irrelevant or repetitive responses. This feature enhances the user experience and makes interactions with AI systems more natural and intuitive.

More Emotionally Intelligent AI

Another groundbreaking feature of GPT-4 is its emotional intelligence, allowing it to recognize and respond to users’ emotions. By analyzing the tone, sentiment, and intention behind messages, GPT-4 generates empathetic and contextually appropriate responses, enhancing the overall communication experience and fostering more natural interactions between humans and AI systems.

Domain-Specific Expertise

GPT-4 can also be fine-tuned to develop expertise in specific domains, such as finance, healthcare, or law. By focusing on domain-specific data, GPT-4 offers specialized knowledge and terminology, making it an invaluable resource for businesses and organizations across various professional fields.

Summing it up

GPT-4 is a large multimodal model that can accept both text and image inputs and output human-like text. For example, a user could upload a worksheet and have GPT-4 answer the questions on it, or have it read a graph and make calculations based on the data presented. The intellectual capabilities of GPT-4 are also improved, outperforming GPT-3.5 in a series of simulated benchmark exams.
GPT-4 was unveiled on March 14, 2023. OpenAI has yet to make its visual input capabilities broadly available, as the research company is starting out by collaborating with a single partner. However, you can access GPT-4’s text input capability through a subscription to ChatGPT Plus, which guarantees subscribers access to the language model at the price of $20 a month.

GPT models have a wide range of applications, including answering questions, summarizing text, translating text to other languages, generating code, and creating blog posts, stories, conversations, and other content types.

GPT-3 Vs GPT-4: 5 Key Differences

Courtesy: Sam Szuchan

Increased Model Size

One of the most notable differences between GPT-3 and GPT-4 is the increase in model size. GPT-3 has 175 billion parameters, making it one of the largest language models available. OpenAI has not disclosed GPT-4’s parameter count, but rumors suggest that it could have as many as 10 trillion parameters. An increase in model size on that scale would allow GPT-4 to generate even more sophisticated and nuanced responses to complex queries.

Enhanced Training Data

Another significant difference between GPT-3 and GPT-4 could be the training data used to train the model. GPT-3 was trained on a massive dataset of over 45 terabytes of text sourced from the internet, books, and other written sources. GPT-4 may have had access to even more diverse and extensive training data, including audio and video sources. This could allow GPT-4 to develop a deeper understanding of human language and generate more accurate and contextually relevant responses.

Improved Accuracy

While GPT-3.5 could handle 4,096 tokens, or around 3,000 words, GPT-4 can process up to 32,768 tokens, or around 25,000 words. This makes it more suitable for handling lengthy conversations and generating long-form content. GPT-4 scores 40% higher than GPT-3.5 on factuality evaluations and has significantly reduced hallucinations relative to previous models.
It is also harder to trick into producing undesirable outputs such as hate speech and misinformation.

Size and Complexity

Another major difference between GPT-3 and GPT-4 is their size and complexity. GPT-3 has 175 billion parameters, making it one of the largest language models to date. OpenAI has not published GPT-4’s parameter count, but some estimates put it between 250 billion and 400 billion parameters. This increase in size would allow GPT-4 to have an even greater understanding of natural language and the ability to generate more complex and nuanced responses.

Multilingual Support

On a test made up of thousands of multiple-choice questions translated into 26 languages, GPT-4 beats the English-language performance of GPT-3.5 and other language models in 24 of those languages. Users can expect chatbots based on GPT-4 to produce outputs with greater clarity and higher accuracy in their native languages.

In addition to these improvements, GPT-4 is reportedly better at citing sources when creating text, which would be a significant step forward in generating trustworthy and accurate content. Overall, GPT-4 promises to be a significant upgrade from GPT-3 and GPT-3.5, and users can expect more from it.

Conclusion

As the AI landscape continues to evolve, it’s clear that language models like GPT-4 will play an increasingly important role in shaping our digital future. With its enhanced features and capabilities, it promises to be a major step forward from the highly successful GPT-3. From improved contextual understanding to more advanced language processing, GPT-4 represents a significant leap in AI technology. Whether it’s in the realm of virtual assistants, chatbots, or even content creation, GPT-4’s advanced capabilities promise to open up new possibilities and transform the way we interact with technology. We’re excited to see where this technology will take us, and we hope you are too. |
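
To put the context-window figures from the article in perspective, here is a minimal sketch that converts token limits into rough word counts. It assumes the common rule of thumb that one token is about 0.75 English words; that ratio is an approximation rather than an exact tokenizer count, and the helper name `approx_words` is made up for this illustration.

```python
# Rule of thumb (an approximation, not an exact tokenizer count):
# one token corresponds to roughly 0.75 English words.
WORDS_PER_TOKEN = 0.75


def approx_words(tokens: int, words_per_token: float = WORDS_PER_TOKEN) -> int:
    """Estimate how many English words fit into a given number of tokens."""
    return round(tokens * words_per_token)


# Context-window sizes quoted in the article.
context_windows = {
    "GPT-3.5": 4_096,
    "GPT-4": 32_768,
}

for model, tokens in context_windows.items():
    print(f"{model}: {tokens:,} tokens is roughly {approx_words(tokens):,} words")
```

Under that assumption, 4,096 tokens comes to about 3,000 words and 32,768 tokens to about 25,000 words. Real counts vary with the language and style of the text.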

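The Few-shot, One-shot, and Zero-shot settings described in the GPT-3 section differ only in how many worked examples are placed in front of the task. The sketch below shows one way such prompts might be assembled as plain text. The `Input:`/`Output:` layout, the translation task, and the `build_prompt` helper are all assumptions chosen for illustration, not a format required by any OpenAI model.

```python
from typing import Sequence, Tuple


def build_prompt(
    instruction: str,
    query: str,
    shots: Sequence[Tuple[str, str]] = (),
) -> str:
    """Assemble a prompt: an instruction, zero or more examples, then the task."""
    blocks = [instruction]
    # Each "shot" is an input paired with its expected output.
    blocks += [f"Input: {x}\nOutput: {y}" for x, y in shots]
    # The final block is the task itself, left open for the model to complete.
    blocks.append(f"Input: {query}\nOutput:")
    return "\n\n".join(blocks)


instruction = "Translate English to French."
demos = [("cheese", "fromage"), ("house", "maison"), ("book", "livre")]

zero_shot = build_prompt(instruction, "cat")            # no examples
one_shot = build_prompt(instruction, "cat", demos[:1])  # exactly one example
few_shot = build_prompt(instruction, "cat", demos)      # several examples

print(few_shot)
```

A real Few-shot prompt would typically carry more examples than the three shown here; the structure stays the same.
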
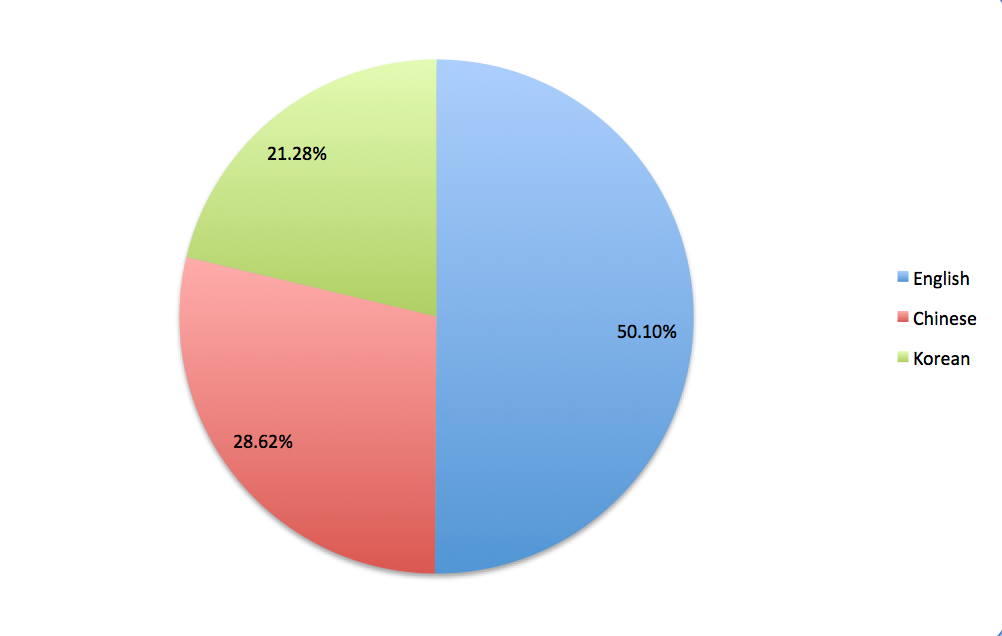

As of 2/7/2023

In Episode 1, in June ’21, I said the following:

“From here on out, based upon my view of the ‘layers’ I’ve seen over the past 4 decades (throughout my career), cryptocurrency is, in my view, the Seventh Layer.

Cryptocurrency is no different, in that it requires every one of the previous 6 layers before it, or, it would not exist either.

There will be a layer 8 at some point, and, someone, somewhere already knows what it will be.”

Now, as we find ourselves 20 months later, in February ’23, the emergence of ‘Layer 8’ is absolutely clear.

https://www.cnbc.com/2023/02/06/google-announces-bard-ai-in-response-to-chatgpt.html

It would be wise to view each of these major events as a strategic, permanent layer in an ongoing evolution, rather than treating these successive architectural layers as independent or unrelated entities.

It’s a war!

GPT-4 Is Coming: A Look into The Future of AI

An overview of hints and expectations about GPT-4 and what the OpenAI CEO recently said about it.

This is a short audio preview of Episode 8, Carbon Credits and Crypto. It is being suggested that this could be one of the most lucrative investment opportunities of the next decade, because of the still relatively unknown but massive impact of mandatory compliance in this market for multinational firms around the globe.

Here is a short preview of Episode 7, Bitcoin and Beyond Miami 2022.

The 4-day Bitcoin 2022 Conference included an Industry Day for speaker panels and conferences, as well as a Sound Money Festival on the last day with live performances, entertainment, and other giveaways for attendees. Attendees had in-person access to thousands of global decision makers, increased their brand’s visibility, received important face time with clients and partners, and celebrated one of the world’s fastest-growing technologies.